Imagine reducing hours of manual brain MRI segmentation to seconds.

Brain tumor boundaries emerge automatically, and deep learning is making this possible. It brings precision, speed, and consistency to neuro-oncology workflows.

Deep learning leverages CNNs, attention U-Nets, and transformer hybrids to automatically segment multi-modal MRI scans. These models outperform classical methods in terms of speed, accuracy, and reproducibility, enabling precise tumor boundary delineation for clinical use across various settings.

This article covers brain tumor MRI segmentation with deep learning: core architectures, datasets, evaluation metrics, and clinical impact.

What Is MRI-Based Brain Tumor Segmentation?

MRI-based brain tumor segmentation splits MRI scans into labeled regions, separating tumor tissue from healthy brain structures. Each voxel is grouped by shared features, including:

- Intensity

- Texture

- Boundaries

Together, these features yield a clear map of tumor subregions.

This automated grouping replaces guesswork with precise, data-driven labels. Segmentation is the critical first step for any downstream analysis.

In manual segmentation, expert radiologists painstakingly draw tumor outlines on each slice of the image. This slice-by-slice tracing can take hours per case and varies by reader.

Automated deep learning models learn to predict these outlines in seconds. Semi-automatic tools still need some user correction; fully automatic networks deliver end-to-end predictions without manual input.

Survey articles on the topic report that deep networks generally outperform classical methods in both speed and consistency.

Accurate segmentation drives better care.

- It supplies precise volume measurements for radiation therapy planning.

- It standardizes regions for radiomic feature extraction, yielding reliable biomarkers.

- In the operating room, clear 3D tumor maps guide surgeons to maximize resection while preserving healthy tissue.

Automated segmentation thus accelerates workflows and underpins personalized neuro-oncology.

Why Deep Learning for Brain Tumor MRI Image Segmentation

Classical image-processing techniques—such as thresholding and region growing—segment tumors by grouping pixels with similar intensities or spatial proximity. They struggle when tumor boundaries are fuzzy or when intensity overlaps with healthy tissue.

Such methods often require manual tuning of thresholds for each patient and fail in the presence of MRI noise or varying scanner settings.
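To make the tuning problem concrete, here is a minimal sketch of region growing on a toy 2D slice. The function name `region_grow`, the 4-connected neighborhood, and the intensity values are assumptions chosen for illustration, not a reference implementation of any particular tool.

```python
from collections import deque

def region_grow(image, seed, tolerance):
    """Grow a region from `seed`, adding 4-connected pixels whose intensity
    is within `tolerance` of the seed pixel's intensity."""
    rows, cols = len(image), len(image[0])
    seed_value = image[seed[0]][seed[1]]
    region = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and (nr, nc) not in region
                    and abs(image[nr][nc] - seed_value) <= tolerance):
                region.add((nr, nc))
                queue.append((nr, nc))
    return region

# Toy slice (invented values): a bright core (~200) with a fuzzy rim (~150)
# surrounded by darker tissue (~100).
toy_slice = [
    [100, 100, 100, 100, 100],
    [100, 150, 200, 150, 100],
    [100, 200, 210, 200, 100],
    [100, 150, 200, 150, 100],
    [100, 100, 100, 100, 100],
]

tight = region_grow(toy_slice, seed=(2, 2), tolerance=20)   # core only
loose = region_grow(toy_slice, seed=(2, 2), tolerance=120)  # leaks everywhere
print(len(tight), len(loose))
```

With a tolerance of 20 the region stops at the bright core and misses the fuzzy rim; with a tolerance of 120 it floods the whole slice. The usable value lies somewhere in between and shifts with every scanner and patient, which is the manual tuning burden described above.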

Convolutional neural networks (CNNs) overcome these limits by learning feature hierarchies directly from the data. Early layers capture edges and textures; deeper layers encode complex tumor shapes and context.

This end-to-end learning replaces handcrafted rules with optimized filters. CNNs adapt to multi-modal inputs (T1, T1c, T2, FLAIR), automatically fusing information across sequences for richer representations.

The impact is clear.

- Deep models segment complete 3D volumes in seconds, compared to the minutes or hours it would take to do so manually.

- They deliver consistent results across cases and sites, boosting reproducibility.

- They integrate with multimodal pipelines, handling the MRI contrasts they were trained on without per-patient tuning.

This speed and reliability accelerate clinical workflows, support strong radiomic analyses, and enable near-real-time guidance in the operating room.

Deep Learning Architectures In Brain Tumor MRI Segmentation

Deep learning architectures have transformed MRI segmentation by learning complex features directly from data. The key models driving this progress are outlined below.

U-Net and Its Variants

U-Net introduced a symmetric encoder–decoder design with skip connections that shuttle fine-grained features from down-sampling layers directly to up-sampling layers. This lets the network recover spatial detail lost during pooling.

Early layers learn edges and textures; deeper layers capture high-level context.

3D U-Net extends this concept to volumetric data by replacing 2D convolutions with 3D ones. It processes entire MRI volumes in one pass, preserving inter-slice continuity and boosting accuracy on complex tumor shapes.

This volumetric approach demands more memory but yields more coherent segmentations across slices.

Attention U-Net

Attention U-Net augments the U-Net backbone with attention gates that re-weight skip-connection feature maps based on their relevance to tumor regions. Spatial attention highlights key areas, while channel attention, added in some variants, emphasizes the most informative feature channels.

This selective focus enables the model to ignore background noise and adapt to irregular tumor boundaries. Studies have shown that attention modules improve Dice scores, particularly for small or diffuse lesions.

Transformer-Based Models

Transformers bring self-attention to medical imaging. Self-attention computes pairwise relationships between all tokens (typically patches of voxels), capturing long-range dependencies and global context beyond local convolutional receptive fields.
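The pairwise computation can be sketched in a few lines. This is a simplified illustration, assuming each token is a small feature vector (in practice, an embedding of an image patch) and using identity query, key, and value projections; real transformer layers learn those projections and use several attention heads.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens):
    """Scaled dot-product self-attention with identity Q/K/V projections
    (a simplification; real models learn these projections)."""
    dim = len(tokens[0])
    weights, outputs = [], []
    for query in tokens:
        # Similarity of this token to every token, including itself.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in tokens]
        row = softmax(scores)
        weights.append(row)
        # Output is the attention-weighted average of all tokens.
        outputs.append([sum(w * tok[i] for w, tok in zip(row, tokens))
                        for i in range(dim)])
    return outputs, weights

# Three hypothetical patch embeddings: the first two are similar.
tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = self_attention(tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Every token attends to every other token regardless of spatial distance, which is where the global context comes from. The cost grows quadratically with the number of tokens, one reason practical models attend over patches rather than individual voxels.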

Pure transformer models can be data-hungry, so hybrid CNN-Transformer pipelines first extract low-level features via convolutions, then refine segmentation with transformer blocks. Early results indicate that these hybrids outperform CNNs on heterogeneous tumors and in regimes with scarce data.

MRI Segmentation Deep Learning: Datasets & Preprocessing

High-quality data is the backbone of any deep-learning pipeline. Proper preprocessing turns raw scans into reliable inputs for robust models.

Public Benchmarks

The Brain Tumor Segmentation (BraTS) challenge provides a standardized, multi-modal MRI dataset—T1, contrast-enhanced T1 (T1c), T2, and FLAIR—each expertly annotated into tumor subregions.

Researchers worldwide train and evaluate on BraTS to ensure fair comparisons and drive innovation.

Preprocessing Steps

- Intensity normalization – MRI scans vary in brightness and contrast, making it necessary to adjust for these differences. Techniques like Z-score normalization or Nyúl’s method rescale intensities onto a common range, helping networks learn consistent features across institutions.

- Skull-stripping – Automated tools remove non-brain tissue (scalp, skull, fat) from MRI scans, focusing the model on brain anatomy and reducing false positives. This step uses brain masks to isolate relevant voxels.

- Data augmentation – Random rotations, scaling, elastic deformations, and intensity shifts generate varied training examples on the fly. Augmentation prevents overfitting and boosts generalization to unseen tumor shapes and imaging artifacts.
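As a rough sketch of how the first two steps combine, the snippet below applies Z-score normalization using statistics computed only inside a brain mask. The flattened voxel list, the mask, and the function name `zscore_in_mask` are assumptions for illustration; a real pipeline would operate on 3D arrays with a mask produced by a skull-stripping tool.

```python
from statistics import mean, pstdev

def zscore_in_mask(intensities, brain_mask):
    """Z-score normalize voxels inside the brain mask; set the rest to 0.

    Mean and standard deviation come from brain voxels only, so bright
    skull or scalp voxels do not distort the statistics.
    """
    brain_values = [v for v, inside in zip(intensities, brain_mask) if inside]
    mu = mean(brain_values)
    sigma = pstdev(brain_values)
    if sigma == 0:
        raise ValueError("brain region has constant intensity")
    return [(v - mu) / sigma if inside else 0.0
            for v, inside in zip(intensities, brain_mask)]

# Hypothetical flattened scan: background, three brain voxels, bright skull.
intensities = [0, 0, 400, 500, 600, 900]
brain_mask = [False, False, True, True, True, False]

normalized = zscore_in_mask(intensities, brain_mask)
print([round(v, 3) for v in normalized])
```

After this step, brain voxels have zero mean and unit variance within each scan, so two scanners with different brightness scales produce comparable inputs.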

Patch-Based vs. Full-Volume Training

Patch-based training extracts smaller subvolumes (e.g., 80 × 80 × 80 voxels) using sliding windows. This accommodates GPU memory, enables oversampling of rare tumor regions, and balances class distributions. However, it may lose long-range context.
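A minimal sketch of the sliding-window step follows. It only computes patch origins and assumes a 240 × 240 × 155 volume (the shape commonly used for BraTS scans, stated here as an assumption) with a stride equal to the patch size; the helper names are hypothetical.

```python
from itertools import product

def axis_starts(size, patch, stride):
    """Start indices along one axis so that patches cover the full extent."""
    if patch > size:
        raise ValueError("patch is larger than the volume along this axis")
    starts = list(range(0, size - patch + 1, stride))
    if starts[-1] != size - patch:
        # Add a final patch flush with the border so no voxels are skipped.
        starts.append(size - patch)
    return starts

def patch_origins(volume_shape, patch_shape, stride):
    """All (x, y, z) origins of sliding-window patches in a 3D volume."""
    per_axis = [axis_starts(size, patch, step)
                for size, patch, step in zip(volume_shape, patch_shape, stride)]
    return list(product(*per_axis))

origins = patch_origins(volume_shape=(240, 240, 155),
                        patch_shape=(80, 80, 80),
                        stride=(80, 80, 80))
print(len(origins), origins[0], origins[-1])
```

Along the 155-voxel axis the last patch overlaps the previous one so that the whole volume is covered. For oversampling rare tumor regions during training, origins are often drawn at random around tumor voxels instead of on a fixed grid.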

Full-volume training processes entire MRI scans in one pass (as in 3D U-Nets). It preserves spatial continuity and global context, leading to more coherent segmentations across slices. The trade-off is higher memory use and longer training times.

Together, these benchmarks and preprocessing steps establish a foundation for reliable and reproducible brain MRI segmentation models.

MRI Segmentation Deep Learning Evaluation Metrics

Multiple metrics capture different facets of segmentation quality, providing both volume accuracy and boundary precision.

Dice Similarity Coefficient (DSC)

DSC measures the overlap between the predicted segmentation and the ground truth. It ranges from 0 (no overlap) to 1 (perfect match). A higher DSC indicates better volumetric agreement, making it a primary benchmark for tumor segmentation tasks.
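For reference, DSC is twice the number of shared voxels divided by the total number of voxels in both masks. The sketch below represents each mask as a set of voxel coordinates, which is an assumption made for brevity; returning 1.0 when both masks are empty is one common convention, not a universal rule.

```python
def dice(pred_voxels, truth_voxels):
    """Dice similarity coefficient between two sets of voxel coordinates."""
    pred, truth = set(pred_voxels), set(truth_voxels)
    if not pred and not truth:
        return 1.0  # convention: two empty masks agree perfectly
    return 2 * len(pred & truth) / (len(pred) + len(truth))

# Hypothetical masks: 4 true tumor voxels, 3 predicted, 2 in common.
truth = {(0, 0, 0), (0, 0, 1), (0, 1, 0), (0, 1, 1)}
pred = {(0, 0, 1), (0, 1, 1), (0, 1, 2)}

score = dice(pred, truth)
print(round(score, 4))  # 2 * 2 / (3 + 4) = 0.5714
```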

Hausdorff Distance

This metric captures the largest boundary deviation between the predicted and ground-truth tumor contours. For each point on one border, it finds the distance to the closest point on the other border, then reports the maximum of those distances, taken in both directions.

Lower values denote tighter boundary alignment, which is crucial for accurate surgical planning.
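A brute-force sketch of the calculation is shown below, assuming each contour is given as a list of 2D points in voxel units. The symmetric Hausdorff distance takes the larger of the two directed distances. This quadratic-time version is for illustration only; production code would typically use distance transforms or a library routine.

```python
import math

def directed_hausdorff(a, b):
    """Largest distance from any point in `a` to its nearest point in `b`."""
    return max(min(math.dist(p, q) for q in b) for p in a)

def hausdorff(a, b):
    """Symmetric Hausdorff distance: the worse of the two directions."""
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))

# Hypothetical borders: the prediction misses an outlying true boundary point.
pred_border = [(0, 0), (0, 1), (0, 2)]
true_border = [(0, 0), (0, 1), (0, 5)]

print(directed_hausdorff(pred_border, true_border))  # 1.0
print(directed_hausdorff(true_border, pred_border))  # 3.0
print(hausdorff(pred_border, true_border))           # 3.0
```

The two directed values differ, which is why both directions are needed: measuring only from the prediction would hide the missed boundary point.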

Sensitivity & Specificity

Sensitivity (true positive rate) gauges how well the model detects tumor voxels. Specificity (true negative rate) measures how accurately it excludes healthy tissue. Tracking both keeps a model from favoring one class, so that neither under-segmentation nor over-segmentation goes unnoticed.
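Both rates follow directly from the voxel-level confusion counts. The sketch below assumes flattened binary masks (1 for tumor, 0 for healthy tissue) with invented values.

```python
def sensitivity_specificity(pred, truth):
    """Return (sensitivity, specificity) for two flattened binary masks."""
    tp = sum(1 for p, t in zip(pred, truth) if p and t)
    tn = sum(1 for p, t in zip(pred, truth) if not p and not t)
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)
    fn = sum(1 for p, t in zip(pred, truth) if not p and t)
    # Undefined when a class is absent from the ground truth.
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return sensitivity, specificity

# Hypothetical masks: 4 tumor voxels and 6 healthy voxels.
truth = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]

sens, spec = sensitivity_specificity(pred, truth)
print(round(sens, 3), round(spec, 3))  # 0.75 0.833
```

Because healthy voxels vastly outnumber tumor voxels in real scans, specificity tends to sit close to 1 even for mediocre models, so it is best read alongside sensitivity and DSC.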

Why Multiple Metrics Matter

No single metric captures all aspects of segmentation quality.

- DSC excels at volume overlap but can hide boundary errors.

- Hausdorff Distance reveals edge mismatches but ignores volume overlap.

- Sensitivity and specificity highlight class balance issues.

Using all four ensures models perform reliably in clinical settings, supporting both accurate volume estimation and precise boundary delineation.

Key Challenges & Solutions

High variability in MRI scanners and protocols can confuse models if not addressed. Let’s unpack four core challenges and their solutions.

Scanner & Protocol Variability

MRI data come from different machines, field strengths, and imaging settings. These variations shift intensity distributions and noise profiles, causing models trained on one center to fail on another.

Domain adaptation techniques align feature spaces across different sites. Harmonization networks explicitly learn to map scans from diverse protocols into a common representation, thereby reducing “scanner bias” and enhancing cross-site performance.

Tumor Class Imbalance

Tumor tissue often occupies a tiny fraction of the brain volume, making positive examples scarce. Standard losses (like cross-entropy) then bias models toward predicting healthy tissue.

Specialized loss functions such as focal loss down-weight easy negatives, focusing training on tumor voxels. Boundary-aware losses add penalties for misplacing tumor edges, improving contour accuracy in tiny or diffuse lesions.
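The down-weighting effect can be seen in a per-voxel sketch of binary focal loss. The focusing parameter `gamma=2.0` is a commonly used default and is an assumption here, as are the example probabilities; the class-balancing weight that often accompanies focal loss is omitted to keep the example short.

```python
import math

def cross_entropy(p_tumor, label):
    """Binary cross-entropy for one voxel; label is 1 (tumor) or 0 (healthy)."""
    p_true = p_tumor if label == 1 else 1.0 - p_tumor
    return -math.log(max(p_true, 1e-7))

def focal_loss(p_tumor, label, gamma=2.0):
    """Binary focal loss for one voxel: cross-entropy scaled by (1 - p_true)^gamma."""
    p_true = p_tumor if label == 1 else 1.0 - p_tumor
    return (1.0 - p_true) ** gamma * cross_entropy(p_tumor, label)

# An easy healthy voxel (confidently correct) and a hard tumor voxel (missed).
easy_negative = (0.05, 0)
hard_positive = (0.20, 1)

for name, (p, y) in (("easy negative", easy_negative),
                     ("hard positive", hard_positive)):
    ce, fl = cross_entropy(p, y), focal_loss(p, y)
    print(f"{name}: CE={ce:.4f} focal={fl:.4f} ratio={fl / ce:.4f}")
```

The easy negative keeps only 0.25% of its cross-entropy loss, while the hard positive keeps 64%, so the many well-classified healthy voxels contribute far less to the gradient. Setting `gamma=0` recovers plain cross-entropy.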

Computational Demands

Volumetric models, especially 3D U-Nets, require large amounts of GPU memory and long training times. This limits batch sizes and slows experimentation.

Efficient architectures cut parameter counts. Mixed-precision training uses 16-bit computations where it is numerically safe, which can roughly halve activation memory and substantially raise throughput on supported GPUs, typically with little or no loss of accuracy.

Generalization & Robustness

Models can overfit to training-site specifics, failing on unseen data.

Rigorous cross-validation, with splits made by patient and by center, tests real-world performance. Multi-institutional training, whether through pooled data or federated learning, produces more robust models. Extensive external testing helps establish reliability before clinical use.
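One way to set up such splits is leave-one-center-out validation, sketched below. The `(patient_id, center)` records and the function name are hypothetical; the point is that whole centers are held out and no patient appears on both sides of a split.

```python
from collections import defaultdict

def leave_one_center_out(cases):
    """Yield (held_out_center, train_patients, test_patients) for each center.

    `cases` is a list of (patient_id, center) pairs, one per scan. Patients
    are grouped by center so scans of one patient never straddle a split.
    """
    by_center = defaultdict(set)
    for patient_id, center in cases:
        by_center[center].add(patient_id)
    for held_out in sorted(by_center):
        test_ids = by_center[held_out]
        train_ids = set().union(
            *(ids for center, ids in by_center.items() if center != held_out))
        # Drop any patient who was also scanned at the held-out center.
        yield held_out, sorted(train_ids - test_ids), sorted(test_ids)

# Hypothetical cohort: patient p1 has two scans at center A.
cases = [("p1", "A"), ("p1", "A"), ("p2", "A"),
         ("p3", "B"), ("p4", "B"), ("p5", "C")]

folds = list(leave_one_center_out(cases))
for center, train, test in folds:
    print(center, train, test)
```

Each fold measures how a model trained on the other centers performs on a site it has never seen, which approximates deployment at a new hospital more closely than a random split over scans.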

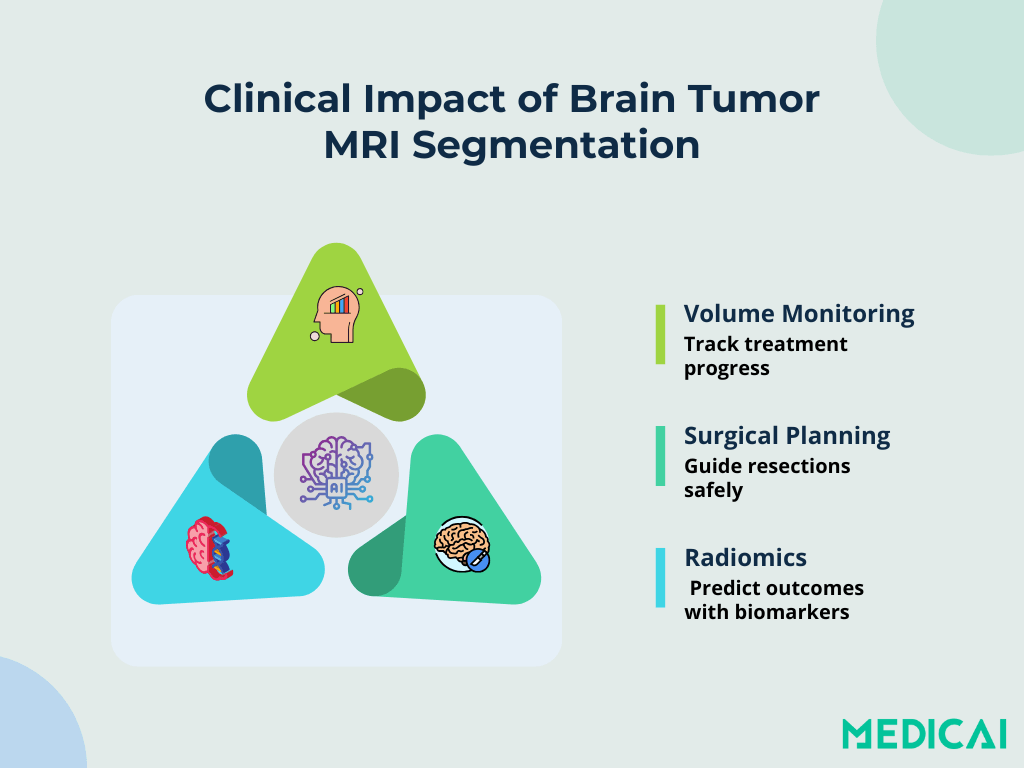

Clinical Applications & Impact

Segmentation underpins key clinical tasks in neuro-oncology. It turns raw MRI scans into actionable insights for personalized care.

Volumetric Growth Monitoring

Automated models quantify tumor volume across follow-up scans in minutes. Tracking volumetric changes enables oncologists to assess treatment response and adapt therapy plans. Precise volume measures reduce reliance on subjective estimates and enhance longitudinal studies.
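The underlying arithmetic is simple: count the voxels labeled as tumor and multiply by the physical volume of one voxel. The sketch below assumes 1 mm isotropic voxels and invented voxel counts; in practice the spacing would be read from the image header.

```python
def tumor_volume_ml(tumor_voxel_count, spacing_mm):
    """Tumor volume in milliliters from a voxel count and voxel spacing (mm)."""
    sx, sy, sz = spacing_mm
    voxel_volume_mm3 = sx * sy * sz
    return tumor_voxel_count * voxel_volume_mm3 / 1000.0  # 1 mL = 1000 mm^3

def percent_change(baseline, follow_up):
    """Relative change from baseline, in percent."""
    return 100.0 * (follow_up - baseline) / baseline

# Hypothetical segmentations of the same patient at two time points.
baseline_ml = tumor_volume_ml(32_000, spacing_mm=(1.0, 1.0, 1.0))
follow_up_ml = tumor_volume_ml(24_000, spacing_mm=(1.0, 1.0, 1.0))

print(baseline_ml, follow_up_ml, percent_change(baseline_ml, follow_up_ml))
# 32.0 24.0 -25.0
```

Scans acquired with different voxel spacing must each use their own spacing values; comparing raw voxel counts across time points would give misleading growth figures.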

Surgical Margin Estimation

Clear delineation of tumor borders guides neurosurgeons in planning resection margins. Three-dimensional maps constructed from segmentation help balance the removal of maximal tumor with the preservation of healthy tissue.

This reduces postoperative deficits and supports better functional outcomes.

Radiomic Feature Extraction

Once tumor regions are defined, radiomic pipelines extract quantitative features (texture, shape, intensity) that serve as imaging biomarkers. These features have been reported to correlate with molecular subtypes and can support survival prediction and risk stratification.

Consistent, automated labels ensure that radiomic analyses remain reproducible across cohorts.

Integration into PACS & Treatment Workflows

Deep-learning outputs seamlessly feed into Picture Archiving and Communication Systems (PACS) provided by platforms like Medicai. Segmented images appear alongside raw scans in radiology workstations, allowing clinicians to review, adjust, and approve contours without switching platforms.

This integration accelerates reporting, supports multidisciplinary tumor boards, and streamlines the path from MRI acquisition to intervention.

Conclusion

Deep learning has reshaped brain tumor segmentation. Modern models provide fast and precise maps of tumor boundaries. They cut hours of manual work to seconds. Automated pipelines support treatment planning, radiomic biomarker discovery, and surgical guidance.

Medicai’s platform brings these tools directly into your workflow. It integrates with your PACS setup and delivers consistent results across cases.