Artificial intelligence has shown impressive results in radiology research settings. From mammography to CT and MRI, AI models often achieve high accuracy when evaluated on curated datasets. Yet once deployed in real clinical environments, many of these same models struggle to maintain performance.

This gap between research success and clinical reality is frequently blamed on “model quality” or “insufficient data.” But growing evidence suggests a deeper issue:

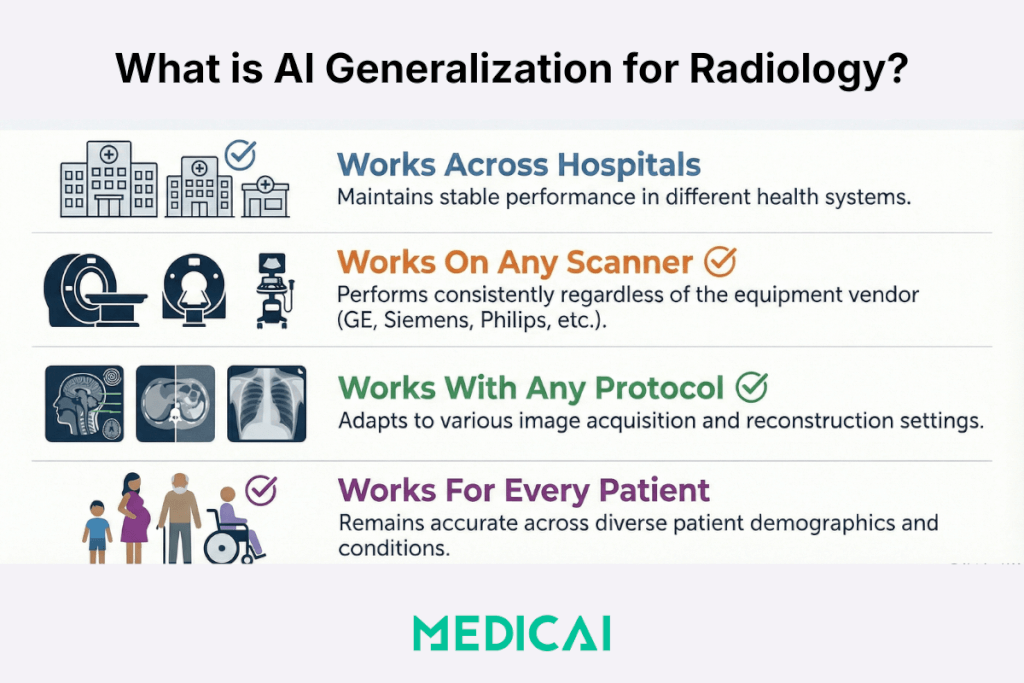

AI generalization in radiology is fundamentally an infrastructure problem.

Without the right imaging foundation, even the most advanced AI models will fail to perform reliably at scale.

The Hidden Reason Radiology AI Fails to Generalize

Radiology images are not uniform inputs. They are shaped by a complex mix of:

- Scanner vendors and hardware generations

- Acquisition parameters (kVp, mAs, slice thickness, compression force)

- Reconstruction algorithms and post-processing

- Institutional protocols and workflow practices

- Biological variation across patient populations

These factors create what researchers call batch effects: systematic differences in image appearance that are unrelated to pathology but still strongly influence AI outputs.

When AI models are trained on limited or homogeneous data, they implicitly learn these hidden patterns. As soon as the model encounters images acquired under different conditions, performance can degrade.

This is why AI that looks “clinically ready” in one setting may fail in another.
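
One way to surface batch effects is to break evaluation results down by acquisition context instead of reporting a single overall number. The Python sketch below assumes each evaluation record already carries a vendor field next to the ground-truth label and the model's prediction. The field names, vendor names, and toy values are invented for illustration and do not come from any study.

```python
from collections import defaultdict

# Hypothetical evaluation records (toy values, not real results).
records = [
    {"vendor": "VendorA", "label": 1, "pred": 1},
    {"vendor": "VendorA", "label": 0, "pred": 0},
    {"vendor": "VendorA", "label": 1, "pred": 1},
    {"vendor": "VendorA", "label": 0, "pred": 0},
    {"vendor": "VendorB", "label": 1, "pred": 0},
    {"vendor": "VendorB", "label": 0, "pred": 0},
    {"vendor": "VendorB", "label": 1, "pred": 1},
    {"vendor": "VendorB", "label": 0, "pred": 1},
]


def accuracy_by_group(records, keys):
    """Return {group: accuracy}, where group is the tuple of values for `keys`."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for record in records:
        group = tuple(record[k] for k in keys)
        total[group] += 1
        correct[group] += int(record["label"] == record["pred"])
    return {group: correct[group] / total[group] for group in total}


# A single pooled number hides the difference between acquisition contexts.
overall = sum(r["label"] == r["pred"] for r in records) / len(records)
by_vendor = accuracy_by_group(records, keys=("vendor",))

print(f"overall accuracy: {overall:.2f}")
for group, acc in sorted(by_vendor.items()):
    print(group, f"{acc:.2f}")
```

In this toy data the pooled accuracy looks acceptable while one vendor group performs no better than chance, which is the pattern a stratified report is meant to expose. The same function accepts several keys at once, so groups could also be formed from any other acquisition field the records carry.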

What Recent Mammography Research Reveals

A recent PLOS ONE study focusing on mammography highlights this challenge in detail. Rather than presenting final diagnostic results, the study proposes a controlled experimental framework to understand why AI models exhibit inconsistent behavior.

By repeatedly imaging ex vivo human breast tissue under systematically varied acquisition settings—and pairing imaging data with biological and histological analysis—the researchers aim to isolate how:

- acquisition parameters alter radiomic features, and

- biological tissue properties further influence AI interpretation

The premise is clear: image variability is not just noise. It is a strong signal that AI models must contend with.

This has implications far beyond mammography. Similar variability exists across CT, MRI, and other modalities.

AI Generalization Is an Infrastructure Problem, Not Just a Model Problem

Most AI discussions focus on algorithms, architectures, and training datasets. But AI does not operate in isolation. It depends on the systems that ingest, store, distribute, and contextualize imaging data.

When imaging infrastructure is fragmented or inconsistent, AI models lose the contextual information they need to generalize safely.

Key gaps often include:

- Missing or inconsistent metadata

- Poor traceability of acquisition parameters

- Siloed image archives across sites

- Limited ability to validate AI outputs longitudinally

In short, AI can only generalize as well as the infrastructure supporting it.
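
The first two gaps can be made measurable with a simple completeness check. The sketch below assumes image headers have already been exported as plain Python dictionaries. The field names mirror DICOM attribute keywords, but the choice of which fields count as required is an assumption made here for illustration, and the sample headers are invented.

```python
# Assumed set of fields an AI pipeline needs; adjust per modality and model.
REQUIRED_FIELDS = ("Manufacturer", "ManufacturerModelName", "KVP", "Exposure")


def is_populated(header, field):
    """A field counts as populated if it is present and not None or empty."""
    return header.get(field) not in (None, "")


def missing_fields(header, required=REQUIRED_FIELDS):
    """List the required fields that are absent or empty in one header."""
    return [f for f in required if not is_populated(header, f)]


def completeness_report(headers, required=REQUIRED_FIELDS):
    """Fraction of headers in which each required field is populated."""
    if not headers:
        return {}
    return {
        f: sum(is_populated(h, f) for h in headers) / len(headers)
        for f in required
    }


# Hypothetical headers (toy values).
headers = [
    {"Manufacturer": "VendorA", "ManufacturerModelName": "X1",
     "KVP": 28, "Exposure": 80},
    {"Manufacturer": "VendorB", "KVP": "", "Exposure": None},
]

report = completeness_report(headers)
gaps = missing_fields(headers[1])
print(report)
print(gaps)
```

Running a report like this per site or per scanner gives a concrete picture of where acquisition context is being lost before any model is deployed.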

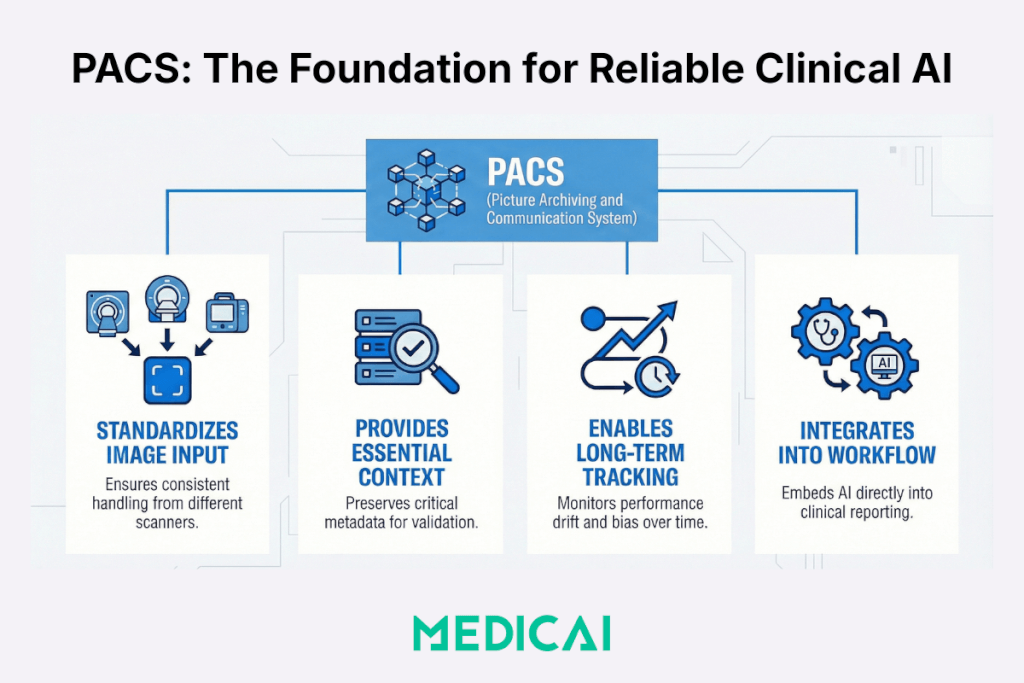

The Role of PACS in Making AI Clinically Reliable

A PACS (picture archiving and communication system) has traditionally been viewed as an image storage system. In the era of AI, it plays a much more active role.

Standardized Image Ingestion Across Vendors

A modern PACS ensures consistent handling of images from different scanners, modalities, and sites. This reduces uncontrolled variability at the point of ingestion.

Metadata Traceability and Context

Acquisition parameters are not peripheral details; they are essential context for AI interpretation. A PACS that preserves and exposes this metadata makes it possible to audit, validate, and refine AI performance.

Longitudinal and Cross-Site Access

AI models must be evaluated over time and across populations. PACS provides the longitudinal access required to monitor drift, bias, and real-world performance.
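
As a rough illustration of what drift monitoring could look like, the Python sketch below groups confirmed outcomes by site and reporting period and flags any group whose accuracy falls below a baseline by more than a tolerance. The tuple layout, the baseline, the tolerance, and the sample data are all assumptions made for this example. A production monitor would also need confidence intervals and minimum sample sizes.

```python
from collections import defaultdict


def flag_drift(outcomes, baseline, tolerance=0.05):
    """Flag (site, period) groups whose accuracy drops below baseline - tolerance.

    `outcomes` is an iterable of (site, period, correct) tuples, where
    `correct` says whether the AI output matched the confirmed result.
    Returns a list of (site, period, accuracy), sorted by site and period.
    """
    hits = defaultdict(int)
    counts = defaultdict(int)
    for site, period, correct in outcomes:
        counts[(site, period)] += 1
        hits[(site, period)] += int(correct)

    flagged = []
    for site, period in sorted(counts):
        accuracy = hits[(site, period)] / counts[(site, period)]
        if accuracy < baseline - tolerance:
            flagged.append((site, period, accuracy))
    return flagged


# Hypothetical outcomes (toy values): 10 cases per site and month.
outcomes = (
    [("A", "2024-01", True)] * 10
    + [("A", "2024-02", True)] * 9 + [("A", "2024-02", False)] * 1
    + [("B", "2024-02", True)] * 7 + [("B", "2024-02", False)] * 3
)

flagged = flag_drift(outcomes, baseline=0.90, tolerance=0.05)
print(flagged)
```

The check only works if outcomes can be joined back to site and time, which is the kind of longitudinal access this section describes.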

Workflow Integration

AI embedded into PACS and reporting workflows benefits from real clinical context, reducing the risk of disconnected or misleading outputs.

Why Standalone AI Tools Struggle Without Infrastructure Support

Many AI tools are deployed as standalone applications layered on top of existing systems. This approach introduces several risks:

- Fragmented workflows and context switching

- Limited feedback loops for performance monitoring

- Inability to track real-world outcomes

- Increased clinical and regulatory risk

Without infrastructure-level integration, AI becomes difficult to govern and even harder to trust.

What “AI-Ready” Imaging Infrastructure Looks Like

AI readiness is not defined by how many algorithms a system supports, but by how well it enables reliability and scale.

AI-ready imaging infrastructure typically includes:

- Cloud-native PACS capable of multi-site operation

- Vendor-agnostic image handling

- Rich metadata preservation and access

- Structured workflows and reporting

- Secure collaboration and auditability

This foundation allows AI to be validated, monitored, and improved continuously—rather than treated as a one-time deployment.

How Medicai Supports AI Generalization in Practice

At Medicai, PACS is designed as imaging infrastructure for modern care delivery, not just as storage.

By focusing on:

- cloud-native, multi-site PACS

- standardized image ingestion

- structured workflows and reporting

- integration points for AI and analytics

Medicai enables organizations to deploy AI in a way that reflects real-world variability rather than ignoring it.

This infrastructure-first approach helps reduce the gap between promising AI research and dependable clinical performance.

What This Means for Radiology and IT Leaders

For CIOs, CMIOs, and Radiology Directors, the implication is straightforward:

Before asking which AI model to deploy, ask:

- Is our imaging infrastructure consistent and traceable?

- Can we monitor AI performance across sites and time?

- Are acquisition parameters and workflows standardized enough to support generalization?

AI adoption without infrastructure readiness risks creating fragile systems that perform well only on paper.

Conclusion: PACS Is No Longer Passive Storage

AI generalization is one of the defining challenges in medical imaging today. The evidence increasingly shows that solving it requires more than better models.

Imaging infrastructure—especially PACS—is the foundation that determines whether AI is reliable, scalable, and safe in clinical practice.

As radiology continues to adopt AI, success will belong to organizations that invest not just in algorithms but also in the systems that enable those algorithms to function reliably in the real world.